Experimental Zone · No Boring Allowed

AR spells, AI creatures, light rituals, and data that dances. This is where the experiments escape the notebook and take over the room.

Creative Technology

As part of my deep dive into the forefront of creative technology, I set out to explore the latest innovations in frontend web development. Today’s creative technologists are pushing the boundaries of what’s possible by seamlessly integrating code with both software and hardware, fundamentally reimagining how we interact with computers. The interface between humans and machines is constantly evolving, opening new dimensions of interaction.

During my time at NYU, I focused on not losing sight of the story and found my voice as a creative technologist while looking toward the future of media and entertainment. I love spectacle and play, especially when play becomes social. The next step is focusing on tech that bridges real and virtual worlds. Whatever tool I use from now on, in business or consumer products, I will keep integrating spectacle and play and pushing the work beyond the screen.

✺ Featured Experiments

tap anything · follow the rabbit holes

Light & Control

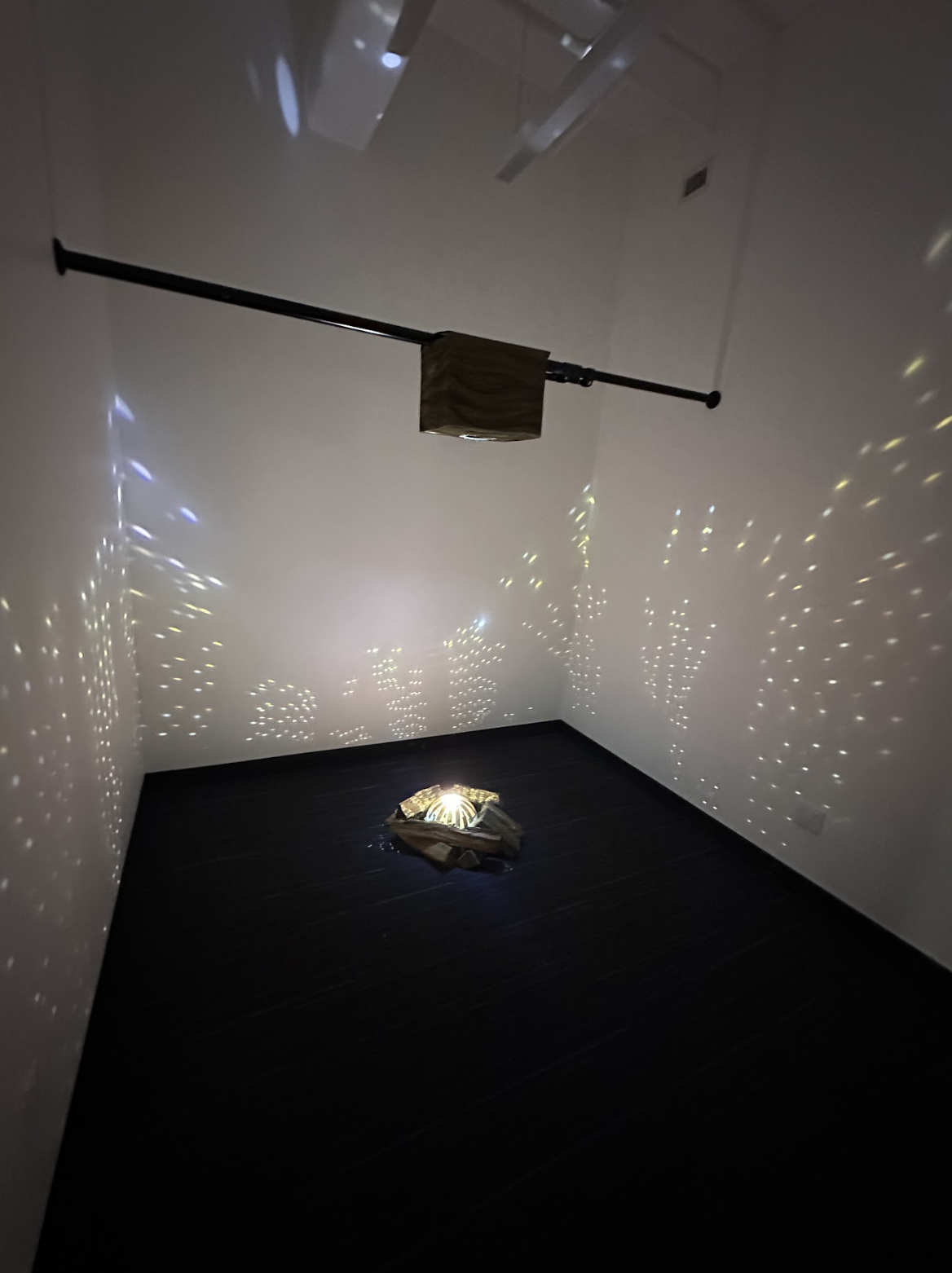

Immersive Design

- Painting the Future: Collaborative Play and AI-powered Creativity in an Interactive 3D World · see the world bend ↗

- Non-linear Storytelling: Peter & Wendy · see the world bend ↗

- Custom particle controller for TouchDesigner · see the world bend ↗

- body as UI

- AI sidekicks

- LED fog

- AR portals

Machine Learning & Data Science

spreadsheets → stories → systems

I explored datasets to analyze and model human behavior and urban dynamics, for example evaluating how different demographics might be impacted by policy changes like congestion pricing in cities.

The goal: build data-driven narratives that highlight structural inequities — and propose solutions that are informed by real data.
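To make the congestion pricing example concrete, here is a minimal Python sketch of one way to compare impact across groups: the share of income each group would spend on the charge. Everything in it is an assumption for illustration. The records, the group labels, the per-trip charge, and the `burden_by_group` helper are made up and are not the datasets or method behind this project.

```python
from collections import defaultdict

# Hypothetical records, invented for illustration only:
# (income_group, annual_income_usd, charged_trips_per_year)
commuters = [
    ("low", 30_000, 100),
    ("low", 36_000, 20),
    ("middle", 70_000, 120),
    ("high", 150_000, 200),
]


def burden_by_group(records, charge_per_trip):
    """Average share of annual income spent on the charge, per income group."""
    shares = defaultdict(list)
    for group, income, trips in records:
        shares[group].append(trips * charge_per_trip / income)
    return {group: sum(vals) / len(vals) for group, vals in shares.items()}


# Hypothetical flat charge of 9.0 per trip.
burden = burden_by_group(commuters, charge_per_trip=9.0)
for group, share in burden.items():
    print(f"{group}: {share:.2%} of income")
```

Measuring the charge as a share of income, rather than in absolute dollars, is one simple way to surface the kind of structural inequity described above: a group can pay less in total and still carry a heavier relative burden.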

AI & Computer Vision

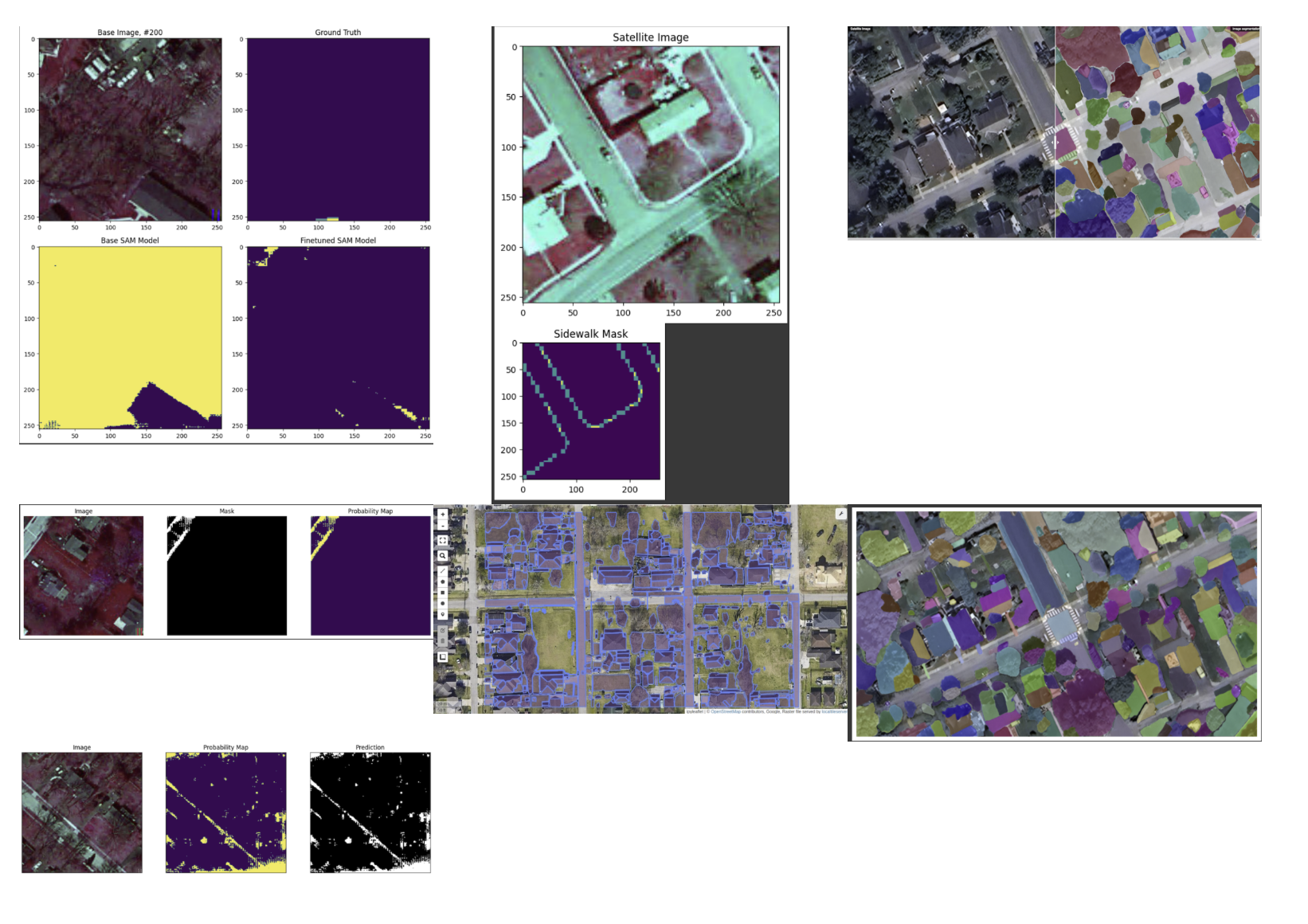

I took a deep dive into implementing various AI models and exploring the mathematical black box of AI. This included training models on datasets, using computer vision on map imagery to predict the path of fixed objects hidden behind other objects ("sidewalks covered by trees"), image recognition, and more.

Inference identifying sidewalks in satellite imagery.

masks · overlays · probability maps
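As a rough illustration of how masks, overlays, and probability maps relate, here is a minimal Python sketch under stated assumptions: a model is assumed to output one sidewalk probability per pixel, a threshold turns that map into a binary mask, and the mask is blended onto the image as a colored overlay. The function names, the 0.5 threshold, and the toy values are hypothetical and do not come from the project's actual model or pipeline.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def probabilities_to_mask(prob_map: List[List[float]],
                          threshold: float = 0.5) -> List[List[bool]]:
    """Mark a pixel as sidewalk when its probability meets the threshold."""
    return [[p >= threshold for p in row] for row in prob_map]


def overlay_mask(image: List[List[Pixel]],
                 mask: List[List[bool]],
                 color: Pixel = (200, 0, 200),
                 alpha: float = 0.5) -> List[List[Pixel]]:
    """Blend a highlight color into masked pixels; leave the rest untouched."""
    def blend(pixel: Pixel) -> Pixel:
        return tuple(round((1 - alpha) * c + alpha * h)
                     for c, h in zip(pixel, color))

    return [
        [blend(px) if m else px for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Toy 2x3 example with made-up probabilities (not real model output).
prob_map = [[0.9, 0.2, 0.6],
            [0.1, 0.5, 0.4]]
image = [[(100, 100, 100)] * 3 for _ in range(2)]

mask = probabilities_to_mask(prob_map)
overlay = overlay_mask(image, mask)
```

Keeping the probability map separate from the mask makes the threshold a tunable choice: lowering it recovers more of a tree-covered sidewalk at the cost of more false positives.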

AR

Interactive AR + Generative AI

Augmented Reality (AR) is rapidly reshaping the landscape of human-computer interaction, blurring the lines between the physical and digital worlds. My journey into AR involved pushing the boundaries of what’s possible with platforms like Niantic Lightship, Adobe Aero, Unreal, Snap Lens Studio, Meta Spark, and TikTok Effect House.

By rigorously testing the limits of these tools, I sought to understand and expand the potential of mixed-reality experiences — using the entire body as an interface for AR effects and storytelling. On top of that, I leveraged generative AI to create a vast array of assets: from 2D images and animated video, to intricate 3D objects — all used across these projects.

For small creative teams or independent creators, this is an exciting time. The tools and techniques are increasingly accessible. My work serves as a testament to the potential of AR + AI to revolutionize how we interact, communicate, and imagine.

Edge AI & Computer Vision

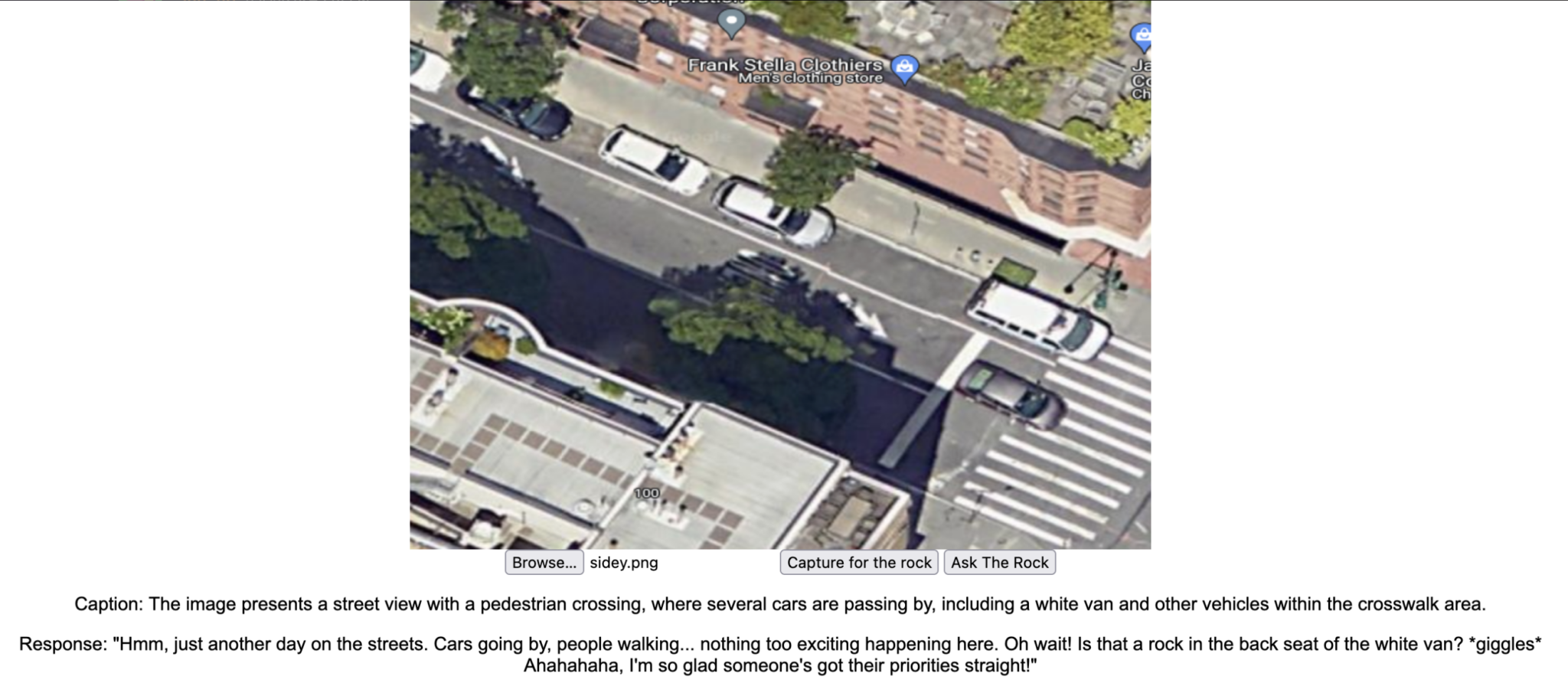

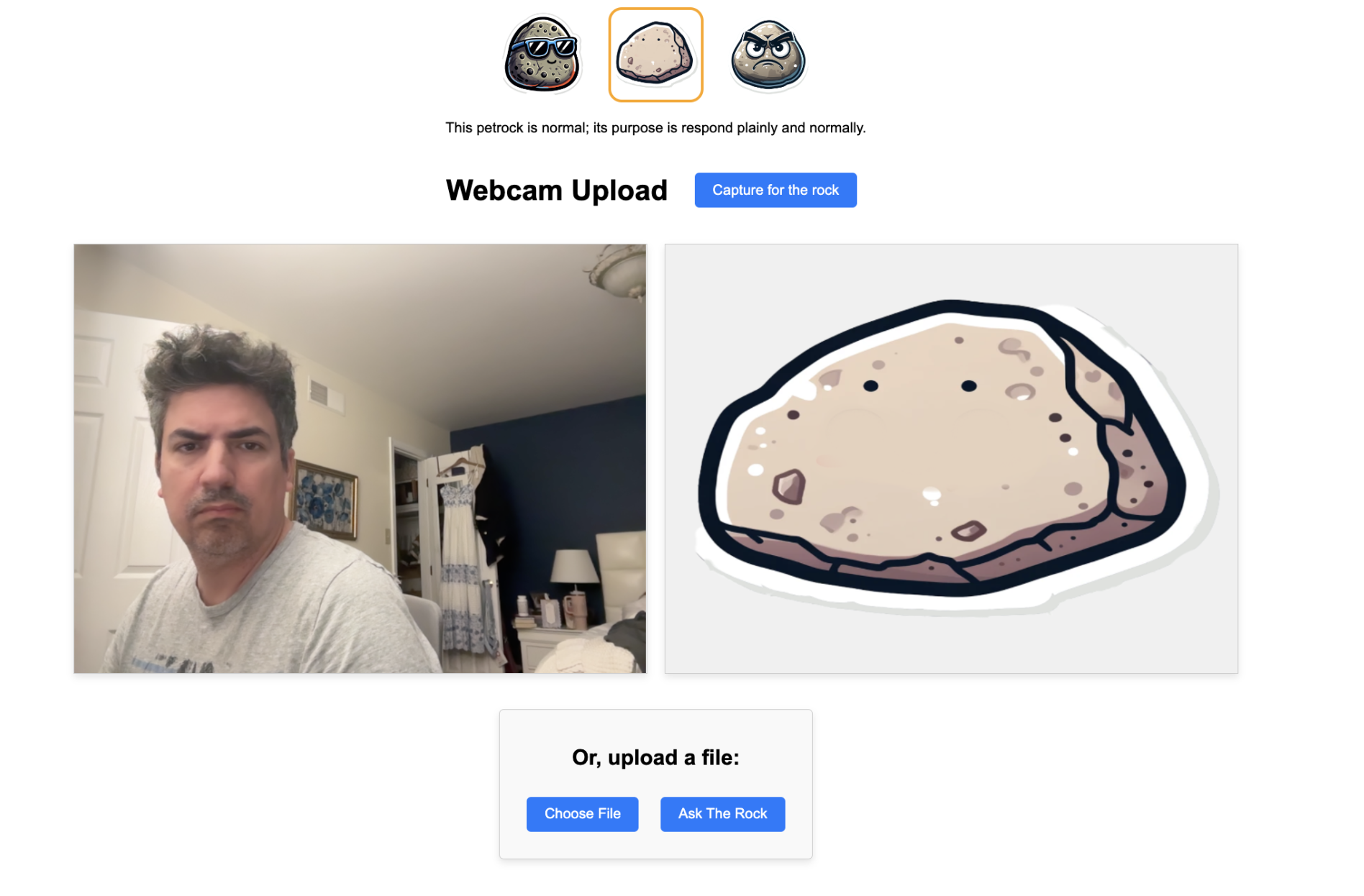

tiny brains, loud personalities

“Hmm, just another day on the streets. Cars going by, people walking… nothing too exciting happening here. Oh wait! Is that a rock in the back seat of the white van? *giggles* Aahahahaha, I’m so glad someone’s got their priorities straight!”

I have been working with NLP since 2018, primarily analyzing the sentiment and context of massive transcript- or text-based libraries. For my grad work, I extended that into research on efficient language models, aiming to run fully functional AI with personality on constrained devices like Raspberry Pi CPUs.

We proved the concept — latency wasn’t ideal, but the experiment showed what’s possible: energy-efficient, offline AI with character. It’s a glimpse at where AI on edge devices could go.

Human-Centered Design for emerging tech (VR)

As we advance into the new age of artificial intelligence, mixed reality, and virtual worlds, common practices of UX/UI, product design, and product management will change drastically. I used this work to test UX theories with touchless interfaces on Meta’s VR Quest headset, focusing on how people actually move, point, and make sense of interfaces floating in space.

UX design plan for Meta’s VR Quest headset, showing a formal approach to getting buy-in for a UX effort.

Creative Code & Data Exploration

p5.js · particles · diary orbits

I delved into experiments using p5.js: transforming a week of diary entries into a visual chart; building immersive particle systems and orbiting characters; and syncing light sensors on microcontrollers (Arduino) to generate sound and color. The result is a symphony of data, light, and motion.

- A personal diary entry turned into a visual journey.

- Immersive particle systems with interactive motion.

- Using light sensors and microcontrollers to create sound + visuals.
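The light-to-sound idea comes down to rescaling a sensor reading into a pitch and a color. Below is a minimal Python sketch of that mapping, written in Python only to keep it language-neutral; the linear rescale plays the same role as p5.js's `map()`. It assumes a 10-bit analog reading (0 to 1023, as on classic Arduino boards). The frequency range, the hue range, and the function names are hypothetical choices, not values taken from the sketch linked below.

```python
import colorsys

ADC_MAX = 1023  # assumption: 10-bit analog reading from the light sensor


def scale(value, in_min, in_max, out_min, out_max):
    """Linearly map value from one range to another, clamped to the input range."""
    value = max(in_min, min(in_max, value))
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def reading_to_sound_and_color(reading, low_hz=110.0, high_hz=880.0):
    """Turn one light reading into a tone frequency (Hz) and an RGB color."""
    frequency = scale(reading, 0, ADC_MAX, low_hz, high_hz)
    # Stop at hue 0.75 so the brightest reading does not wrap back to red.
    hue = scale(reading, 0, ADC_MAX, 0.0, 0.75)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return frequency, (round(r * 255), round(g * 255), round(b * 255))


# Darker readings give low, red tones; brighter readings give higher, bluer ones.
for reading in (0, 512, 1023):
    print(reading, reading_to_sound_and_color(reading))
```

Clamping inside `scale` keeps a noisy or out-of-range reading from producing a frequency outside the chosen band.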

Play the sketch → p5.js Sound Sketch ↗ · headphones highly recommended