ChalkNotes

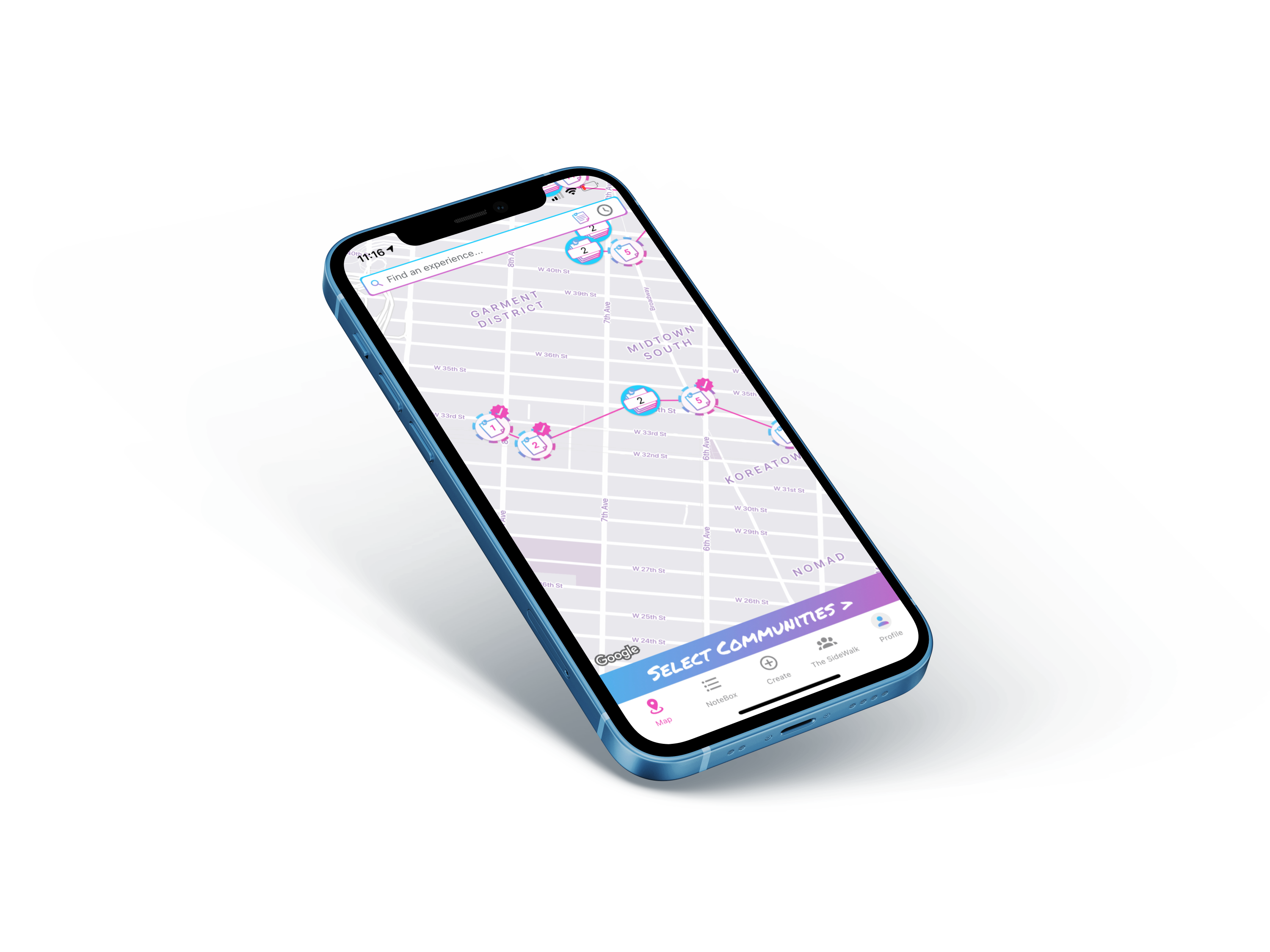

A mixed‑reality audio‑AR platform that lets creators drop stories onto real‑world maps and audiences discover them.

"Visual AR demands too much attention. The business problem was creating a 'Heads-Up' engagement model that allowed users to consume content while navigating busy city streets."

How it Works

ChalkNotes is built around a geospatial audio engine that triggers sound when a listener enters a GPS radius. It is a dual-client ecosystem: a React Native mobile app for geospatial discovery and a React web dashboard for creator administration. The system relies on Firebase for authentication and real-time NoSQL state sync, which also carries creator updates to listeners. A web3 'XP' gamification layer uses tokenized collectibles to drive physical foot traffic.

1. Location-based audio triggers on the mobile map.
2. No-code web authoring tool for spatial story placement.
3. Content discovery feed with experience previews.
4. Community engagement features (sharing, reviews).
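The radius-based trigger described above can be sketched as a distance check between the listener's GPS fix and each placed story. This is a minimal illustration, not the ChalkNotes implementation: the `LatLng` and `AudioPin` shapes and the function names are hypothetical, and the haversine formula is one common way to measure ground distance.

```typescript
// Hypothetical data shapes; none of these names come from the ChalkNotes codebase.
interface LatLng {
  lat: number; // degrees
  lng: number; // degrees
}

interface AudioPin {
  id: string;
  center: LatLng;
  radiusMeters: number; // size of the trigger zone around the pin
}

const EARTH_RADIUS_M = 6371000; // mean Earth radius in meters

const toRad = (deg: number): number => (deg * Math.PI) / 180;

// Great-circle distance between two coordinates (haversine formula).
function haversineMeters(a: LatLng, b: LatLng): number {
  const sinLat = Math.sin(toRad(b.lat - a.lat) / 2);
  const sinLng = Math.sin(toRad(b.lng - a.lng) / 2);
  const h =
    sinLat * sinLat +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * sinLng * sinLng;
  // Clamp to 1 so rounding error cannot push asin out of its domain.
  return 2 * EARTH_RADIUS_M * Math.asin(Math.min(1, Math.sqrt(h)));
}

// Returns every pin whose trigger radius contains the listener.
function pinsInRange(user: LatLng, pins: AudioPin[]): AudioPin[] {
  return pins.filter(
    (pin) => haversineMeters(user, pin.center) <= pin.radiusMeters
  );
}
```

In a mobile client, a function like `pinsInRange` would typically run on each location update, with the returned pins handed to whatever audio player the app uses.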

Designing the Interface

The core design challenge was 'Heads-Up' usability. I moved the primary interaction controls to the bottom third of the screen (the thumb zone) and designed high-contrast, bold typography that remains legible in direct sunlight. The 'Audio Radar' visualization gave users directional feedback without requiring map literacy, easing the 'blue dot' anxiety common in GPS apps.

The same principle shaped how augmented reality elements are activated. Instead of relying on visual markers alone, I designed an audio-centric interface in which users hear spatial cues as they approach points of interest. The UI provides subtle visual feedback, but the primary interaction is auditory, so users can stay aware of their surroundings while engaging with content.
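One way to picture the directional feedback is as a bearing calculation: find the compass direction from the listener to a point of interest, subtract the direction the listener is facing, and map the result to a left/right stereo pan. The sketch below assumes that approach; the source does not say how 'Audio Radar' is computed, and every name here (`GeoPoint`, `bearingDegrees`, `stereoPan`) is hypothetical.

```typescript
// Hypothetical sketch; not taken from the ChalkNotes codebase.
interface GeoPoint {
  lat: number; // degrees
  lng: number; // degrees
}

const degToRad = (deg: number): number => (deg * Math.PI) / 180;
const radToDeg = (rad: number): number => (rad * 180) / Math.PI;

// Initial compass bearing from one point to another, in [0, 360).
function bearingDegrees(from: GeoPoint, to: GeoPoint): number {
  const phi1 = degToRad(from.lat);
  const phi2 = degToRad(to.lat);
  const dLambda = degToRad(to.lng - from.lng);
  const y = Math.sin(dLambda) * Math.cos(phi2);
  const x =
    Math.cos(phi1) * Math.sin(phi2) -
    Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLambda);
  return (radToDeg(Math.atan2(y, x)) + 360) % 360;
}

// Angle of the target relative to where the listener is facing, in [-180, 180).
// Negative means the target is to the left. Assumes heading is in [0, 360).
function relativeAngleDegrees(bearing: number, headingDeg: number): number {
  return ((bearing - headingDeg + 540) % 360) - 180;
}

// Maps the relative angle to a stereo pan: -1 is hard left, +1 is hard right.
// Targets directly ahead and directly behind both give about 0, so a real
// design would need a second cue (volume or filtering, for example) to
// tell front from back.
function stereoPan(relativeDeg: number): number {
  return Math.sin(degToRad(relativeDeg));
}
```

For example, a listener facing north with a story due east of them gets a pan close to +1, so the cue plays in the right ear.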

1. Validated an 'Audio-First' AR interaction model, reducing screen-time during the experience by 60%.
2. Shipped a 'No-Code' spatial authoring tool allowing creators to place audio without technical skills.
3. Solved for GPS drift by designing broad 'Audio Geofences' rather than precise visual anchors.
4. Delivered a seamless user experience that balanced engagement with safety in urban environments.
5. Codified best practices for mixed‑reality experience design in open, unpredictable environments.
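To illustrate why broad geofences tolerate GPS drift, here is a small sketch of a zone that is entered at its radius but only exited once the listener is clearly outside it. The exit margin (hysteresis) is an assumption added for illustration; the source only states that the geofences were made broad. All names and thresholds are hypothetical.

```typescript
// Hypothetical sketch; the exit margin is an assumption, not a documented
// ChalkNotes behavior.
interface FenceState {
  inside: boolean;
}

type FenceEvent = "enter" | "exit" | null;

// Advances the fence state for one location reading.
// Entering requires distance <= radius; exiting requires
// distance > radius + exitMargin, so small drift near the edge
// does not make the audio start and stop repeatedly.
function updateFence(
  state: FenceState,
  distanceMeters: number,
  radiusMeters: number,
  exitMarginMeters: number
): { state: FenceState; event: FenceEvent } {
  if (!state.inside && distanceMeters <= radiusMeters) {
    return { state: { inside: true }, event: "enter" };
  }
  if (state.inside && distanceMeters > radiusMeters + exitMarginMeters) {
    return { state: { inside: false }, event: "exit" };
  }
  return { state, event: null };
}
```

With a 50 m radius and a 20 m margin, readings that wobble between 48 m and 58 m produce a single "enter" event, and the "exit" event fires only once a reading passes 70 m.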