NYU Grad Portfolio, Phil Olarte

Creative Technology

As part of my deep dive into the forefront of creative technology, I set out to explore the latest innovations in frontend web development. Today’s creative technologists are pushing the boundaries of what’s possible by seamlessly integrating code with both software and hardware, fundamentally reimagining how we interact with computers. The interface between humans and machines is constantly evolving, opening new dimensions of interaction. The barrier to entry is the lowest it has ever been for those wanting to create digitally. Even with all this change, one thing remains: a great experience “starts with a story”.

During my time at NYU, I focused on not losing sight of the story, and I found my voice as a creative technologist while looking toward the future of media and entertainment. I love spectacle and play, especially when play becomes social. The next level is focusing on tech that bridges real and virtual worlds. Whichever tool I use from now on, in business or consumer products, I will continue to integrate spectacle and play, and push the work beyond the screen.

In addition to the content below, click on these links to go deeper into my process for each of these featured projects.

- Light and Control

- Temple of Light

- Simulate Synesthesia

- DUI – A Data-Powered Sequence

- Sounds of Starlight

- Scale – Space chaos (LED art)

- API data and the Addressable LED

Immersive Design

Interactive Augmented Reality + Generative AI

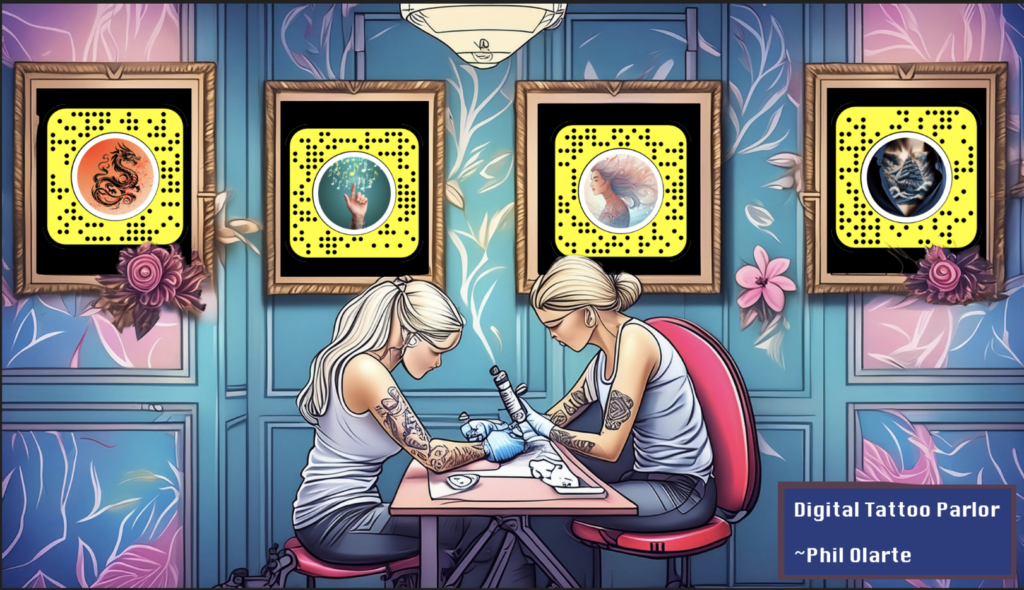

Augmented Reality (AR) is rapidly reshaping the landscape of human-computer interaction, blurring the lines between the physical and digital worlds. My journey into AR involved pushing the boundaries of what’s possible with the most cutting-edge platforms available today, including Niantic Lightship, Adobe Aero, Unreal, Snap Lens Studio, Meta Spark, and TikTok Effect House. By rigorously testing the limits of these offerings, I sought to understand and expand the potential of mixed reality experiences.

In this work, I explored the full spectrum of human-computer interaction, using the entire body as an interface for AR effects and storytelling. But it didn’t stop there—I also harnessed the power of generative AI to create a vast array of assets, from 2D images and animated videos to intricate 3D objects, all of which played pivotal roles in these projects. Generative AI is transforming the creative industries by enabling artists and technologists to focus more on concepts and ideas than the mechanics of delivery. The technology is evolving at an unprecedented pace, with text-to-video generation capabilities improving dramatically in just the past few months—surpassing even what was possible when I completed these projects six months ago.

This is an exhilarating time for small production houses, as the tools and techniques at our disposal continue to grow more powerful and accessible. The future of AR lies in its ability to transform storytelling and interaction, making them more visceral, more engaging, and more human. As we continue to explore and innovate within this space, AR will become an integral part of how we communicate, create, and connect. My work is a testament to the potential of AR and generative AI to revolutionize our world, offering glimpses into a future where the boundaries between the virtual and the real are fluid and ever-changing.

3.52 million views (Snapchat; Custom 3D art)

336K views (Snapchat; GET user data)

16.4K views (Snapchat; Gesture Tracking)

10.5K views (Snapchat; Gesture Tracking)

My Snapchat profile. Use the QR codes to test these Snap AR filters I made in Lens Studio.

Edge AI with personality - An AI PetRock

“Hmm, just another day on the streets. Cars going by, people walking…nothing too exciting happening here. Oh wait! Is that a rock in the back seat of the white van? *giggles* Aahahahaha, I’m so glad someone’s got their priorities straight!”

I have been working with NLP since 2018, primarily analyzing the sentiment and context of large text libraries of transcripts and grants. In my grad work, I built on these basics and started working with LLMs.

The future of AI is leaning towards energy efficiency, emulating human behaviors, and, in some very early use cases, portability, with the emergence of eLMs (Efficient Language Models) leading the way. These models are designed with fewer parameters, making them compact enough to operate on smaller devices.

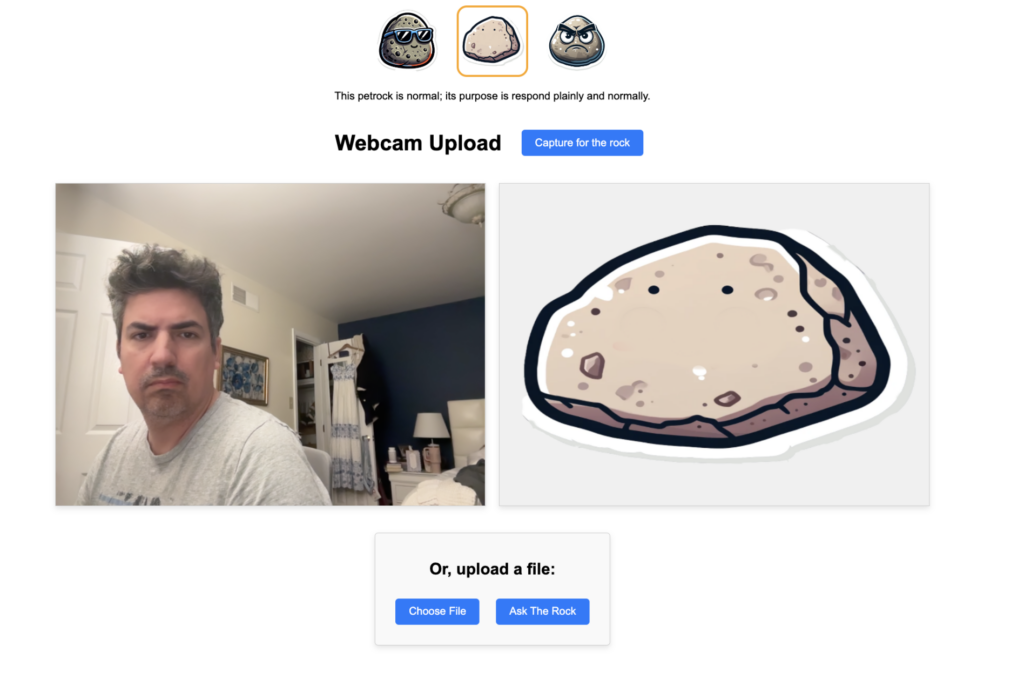

A team of us in my Deep Learning class set out to answer a challenging question: Can we run a fully functional AI, complete with a distinct personality, on a constrained device (in our case a Raspberry Pi CPU) without relying on an internet connection? We succeeded, even though the hardware was not originally designed for such tasks and the latency was less than ideal.

This is our simple UI to pick the emotion of an LLM, and choose webcam or file image input.

Given the time constraints of school, we kept it simple by making our central character a “pet rock” that did more than just sit there and would interact with you. By combining low-token language models with computer vision, we were able to upload an image into the AI, which then described what it saw with a “touch” of personality, in tones ranging from angry to calm or neutral. The downside is the lag between image input and text output. By the day we finished, we were already seeing hardware makers make a play for this type of application.
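One way to picture the “touch of personality” step is as a prompt that pairs the selected emotion with whatever the vision model reports. The sketch below is only an illustration under assumptions: the `PERSONAS` table, the `build_prompt` function, and the prompt wording are hypothetical and are not taken from the PetRock project.

```python
# Hypothetical sketch: persona text and function names are assumptions,
# not the PetRock project's actual code.
PERSONAS = {
    "angry": "You are a grumpy pet rock. Complain about what you see.",
    "calm": "You are a serene pet rock. Describe what you see gently.",
    "neutral": "You are a pet rock. Describe what you see plainly.",
}


def build_prompt(emotion: str, caption: str, max_words: int = 40) -> str:
    """Combine a persona instruction with a vision model's image caption."""
    # Unknown emotions fall back to the neutral persona.
    persona = PERSONAS.get(emotion, PERSONAS["neutral"])
    return (
        f"{persona}\n"
        f"Scene: {caption.strip()}\n"
        f"Reply in under {max_words} words."
    )


if __name__ == "__main__":
    # The caption would come from the computer vision step.
    print(build_prompt("angry", "a white van parked on a busy street"))
```

Capping the reply length is one plausible way to keep generation short on a constrained device, since fewer output tokens means less waiting.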

There’s a growing need for more research in AI on edge devices, especially as security concerns continue to rise in the realm of IoT. Efficient, small-scale AI could offer a viable solution to these challenges, paving the way for safer and more autonomous systems.

Paper on PetRock Edge AI

AI & Computer Vision

I took a deep dive into implementing various AI models and exploring the mathematical black box of AI. This included training models from datasets, performing image recognition, and using computer vision to understand pixels on maps and predict the trajectory of static-position objects obscured by other objects (“sidewalks covered by trees”).

Human Centered Design for emerging tech (VR)

As we advance into the new age of artificial intelligence, mixed reality, and virtual worlds, common practices of UX/UI, product design, and product management will change drastically. Design plans are already being created for headsets, touchless interfaces, spatial computing, and immersive experiences.

Given my master’s focus on emerging technology, I decided to use the class to test UX theories with touchless interfaces and chose Meta’s Quest 2 VR headset.

Usability Evaluation of Meta’s Quest Horizon Feed Interface with Hand Controls

Usable prototype example made with Meta’s VR design assets.

Here is an example of a UX Design Plan for Meta’s Quest VR headset, showcasing a formal approach to getting buy-in for a UX effort.

Creative code with p5.js

In this exploration, I delved into three topics using p5.js (a JavaScript library):

- transforming a one-week personal diary entry into a chart that tells my story,

- crafting immersive experiences with particle systems and orbiting characters, and

- experimenting with a microcontroller (Arduino) light sensor to create a symphony of sound and color.

Controlling sound with light. Light sensors attached to a microcontroller control this Gregorian chant generator.
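The core idea of driving sound from a light sensor can be sketched as a simple range mapping, similar in spirit to p5.js's `map()` function. The version below is written in Python for illustration rather than as the project's p5.js code. It assumes a 10-bit analog reading (0 to 1023, as on classic Arduino boards), and the 110 to 440 Hz pitch range is an arbitrary assumption, not the generator's actual tuning.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from one range to another, clamping to the input range."""
    clamped = max(in_min, min(in_max, value))
    fraction = (clamped - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def light_to_frequency(reading: int) -> float:
    """Turn a light-sensor reading into a pitch in Hz.

    Assumptions: a 10-bit reading (0..1023) and a 110..440 Hz output range.
    """
    return map_range(reading, 0, 1023, 110.0, 440.0)


if __name__ == "__main__":
    for reading in (0, 256, 512, 1023):
        print(reading, round(light_to_frequency(reading), 1))
```

Clamping the input keeps a noisy or out-of-range sensor value from producing a pitch outside the intended range.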

Interaction with computers has transcended the traditional boundaries of keyboards and mice. Static landing pages are a thing of the past. We are now at the cusp of a new era where technology compels us to innovate and evolve at breakneck speeds. This is a world where our gestures, our movements, even the ambient light in a room, can be harnessed to create new forms of expression and communication. My work is a testament to the exciting possibilities that lie ahead as we continue to blur the lines between the physical and the digital, between the artist and the machine.

Sometimes a little happy thought is all you need for a fun interaction. These are two particle systems: one follows the main character, and the other follows a second character that chases the main character.
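The behavior described above, two emitters that each trail a moving character, can be sketched roughly as follows. This is a Python illustration of the logic rather than the original p5.js sketch. The class names, the 30-frame particle lifetime, and the easing factor in `chase` are all assumptions.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float
    life: int = 30  # frames remaining (assumed lifetime)


@dataclass
class ParticleSystem:
    particles: list = field(default_factory=list)

    def update(self, target_x: float, target_y: float, rng: random.Random) -> None:
        """Emit one particle at the target, then move and age every particle."""
        self.particles.append(
            Particle(target_x, target_y, rng.uniform(-1, 1), rng.uniform(-1, 1))
        )
        for p in self.particles:
            p.x += p.vx
            p.y += p.vy
            p.life -= 1
        # Drop particles whose lifetime has run out.
        self.particles = [p for p in self.particles if p.life > 0]


def chase(chaser, target, speed=0.1):
    """Move the chaser a fixed fraction of the way toward the target."""
    cx, cy = chaser
    tx, ty = target
    return (cx + (tx - cx) * speed, cy + (ty - cy) * speed)


if __name__ == "__main__":
    rng = random.Random(0)
    hero, chaser = (100.0, 100.0), (0.0, 0.0)
    hero_trail, chaser_trail = ParticleSystem(), ParticleSystem()
    for _ in range(40):
        chaser = chase(chaser, hero)
        hero_trail.update(*hero, rng)      # first system follows the main character
        chaser_trail.update(*chaser, rng)  # second system follows the chaser
    print(len(hero_trail.particles), len(chaser_trail.particles))
```

In an interactive version, the main character's position would typically come from the mouse, which is why the two trails appear to pursue the pointer.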

Mouse over.

Interpreting data visually to tell a better story. This chart is about my trip from my desk in NYC to climbing the Hollywood Hills and back to my chair.

Machine Learning & Data Science

There are untold amounts of data available for analysis to identify trends in human behavior as well as scripted computer behaviors. I laid a foundation by studying data science and carried it into my machine learning course, which taught the fundamentals of Artificial Intelligence (AI).

I evaluated multiple machine learning models’ ability to analyze traffic, demographic, and income data to detect which groups would theoretically be most impacted by NYC’s new congestion pricing tolls.

These data sets already existed. The next phase of cities should be to connect this data to machine learning models to predict